- how the “workflow” of product design should be harmonious and keep a relationship “to the tonic,” i.e. the objective of the tool or task remains clear;

- how jazz musicians innovated to create new musical approaches, as we innovate to create new software products and new approaches to solving business problems;

- how we try to minimize “boredom” in user interface design by eliminating needless mouse clicks, as musicians try to keep the audience engaged.

By way of example, I read the following comment in an email about music a while ago: “Turnarounds started when jazz players became bored with chords that lasted for two bars or more.” This made me laugh! As the joke goes, a rock musician plays three chords to 3,000 people; a jazz musician plays 3,000 chords to three people. The email went on to say “These players thought up new ways to take a long tonic chord and play other chords on top of it to take the harmony to a different place.”

As described by the ‘Completion Principle’, people almost involuntarily seek to complete that which is not complete.

“When something is certain and known then we feel comfortable and in control. When something is not complete, we cannot close that item in our mind as we have to keep thinking about it.” (Changingminds.org)

Music itself is often a play on an audience’s desire for “completion,” hence the concept of “tension and release” introduced by common harmonic structures such as the II-V-I, which “resolves” back to the I chord (or the V-IV-I in blues).

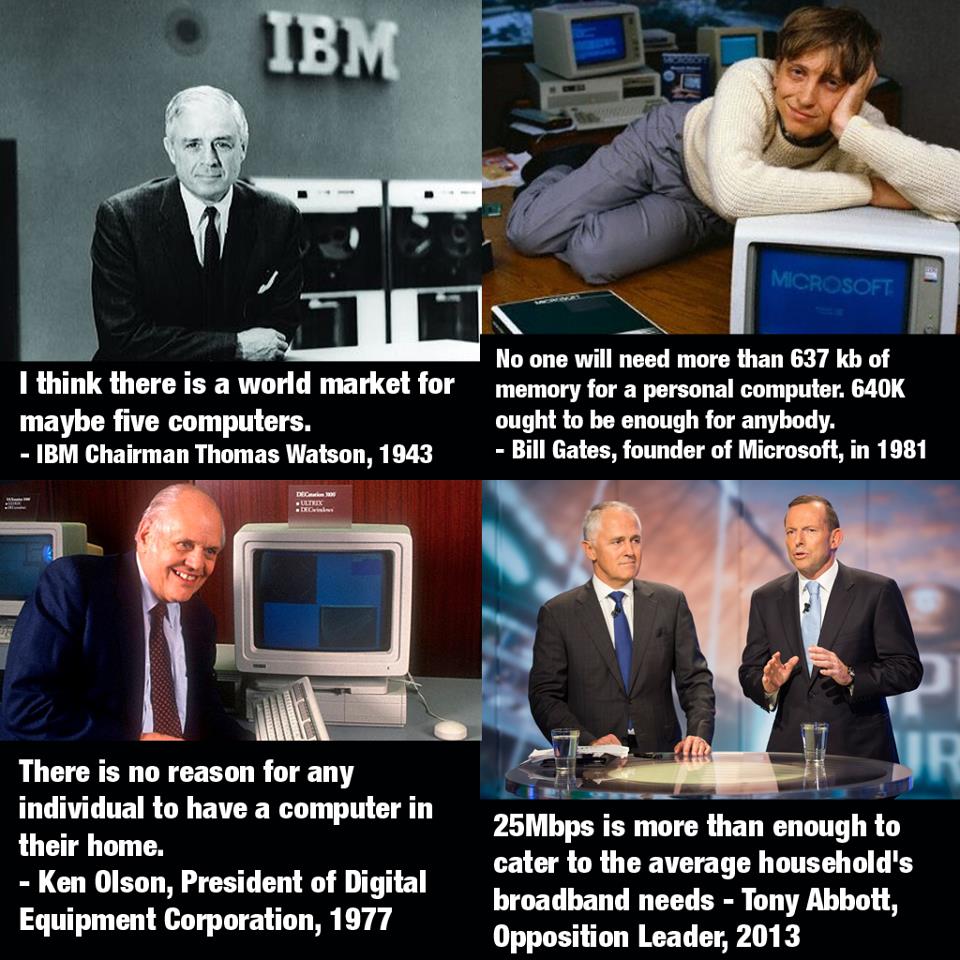

Interestingly, what is considered musically “acceptable” has changed over the years. From at least the early 18th century, one interval was actually referred to as the devil’s chord: “Diabolus in Musica” (the devil in music), what we now call the tritone or flat 5 (diminished 5th). Perhaps equivalently, it wasn’t that long ago that business software programs were often designed for “experts” rather than laymen, with little consideration to usability or intuitiveness. The concept of a “self-service business intelligence” product was almost an oxymoron.

Yet thinking about features and not about flow (workflow) is akin to thinking about scales and modes, and not about the underlying harmony and melody. Having a bunch of loosely coupled UIs or “studios” is a little like having a bunch of musicians who are playing for themselves and not each other, and who are not in tune with the rest of the band.

It is certainly just as important to understand the form/structure of a tune as it is to understand the overall structure of a software solution. Usability design (UX) without an understanding of form and function (business needs/problems) is perhaps the equivalent of elevator music.

Once the form (concept, requirements) is/are well understood, however, then good developers can be trusted to improvise and innovate. Similarly with good musicians. Here is a tip I got from a great jazz guitar teacher, Jody Fisher (in relation to creating improvised lines between chords):

“You know, you can play almost ANYTHING to approach the next chord; you’d be surprised how freeing this can be…It works because any note has some relationship with any chord–of course it’s a matter of taste…”


In our world, I think this has parallels with giving developers “freedom to create” (or “fail-fast”). “Fast failure” is the new culture driving innovation: being afraid to fail kills ideas. Interestingly, the Chinese word for innovation, chuangxin, literally means “create new.”

What is more important, essence or perfection? Comparing waterfall vs agile development could be analogous to comparing classical vs jazz. Classical has detailed “requirements,” painstakingly developed via the score, which is played as written. Jazz is more free-form, a general idea/form (theme) which is then built upon through improvisation (iterations/sprints).

My friend Adam Rafferty, who studied with Mike Longo (former pianist and musical director for Dizzy Gillespie), recently wrote about how Mike described the difference between “How To Play” and “What To Play.”


Adam suggests:

- “What to play” can generally be written on paper in a book form – it’s “information” much like a cookbook.

- “How to play” is a bit more elusive…some chalk it up to “feeling” but it’s much more than emotion. It’s intuition and experience.

Similarly in software development. Concepts (harmony), such as “material design,” or architecture (form), such as the “MEAN stack,” are the building blocks, but it takes intuition and experience to turn these into elegant software. In fact, sometimes “how to play” can equate to “what not to play.” As Miles Davis once said, “it’s not the notes you play; it’s the notes you don’t play.” In his book “The Laws of Simplicity,” John Maeda comments:

“The simplest way to achieve simplicity is through thoughtful reduction” (John Maeda, “The Laws of Simplicity”)

In my R&D lab we often talk about “simplifying complexity.” It’s much harder to make the complex seem simple than it is to make the simple overly complex. Hence the brilliance of someone like my favorite guitarist, Wes Montgomery.

The great Zen teacher Shunryu Suzuki wrote: “In the beginner’s mind there are many possibilities, but in the expert’s there are few.” Regardless of how much we learn or how expert we become, we can benefit from seeing ourselves as beginners. Keep an open mind. Think “outside of the box.”

To finish with an (alleged) quote from the infamous Yogi Berra:

“Anyone who understands jazz knows that you can’t understand it. It’s too complicated. That’s what’s so simple about it.”

And so it may be with good software design…

Dilbert ©2018, Universal Uclick


from Sydney to Los Angeles when the captain announced that there was a problem with the “onboard mainframe” and they were going to have to reboot. Now I had no idea that aircraft run on onboard mainframes…or whether it was actually a mainframe…or how to reboot one. Does one use CTRL-ALT-DEL? Do you just turn it off and back on again? Some wise guy onboard suggested loudly that they “Call Bill Gates.” I don’t know how much this Mr. Gates knows about mainframes. Not much it would seem, because after about two hours the captain announced that the mainframe wasn’t collaborating (he may have said cooperating, but at that point I was a little preoccupied), and the flight was cancelled.


One analogy, which seemed to resonate during my session, was the idea that finding a business insight and sharing it with colleagues should be as easy as “taking a picture and posting it on Facebook.” The increasing complexity of the world we all work in is making it imperative for us to work collaboratively to solve business problems. The old world of silos and “information hoarding” is not effective, and the information needed to support business decision-making needs to be quickly accessible, wherever it resides.


the BI industry in general, we’ve seen what Wayne Eckerson recently referred to as a “pendulum swing” – away from (over) governed BI to un-governed BI. The pendulum is now swinging back, because business users are now starting to ask questions like:


I’m reminded of something I noticed on the Deep Purple record “Made in Japan,” recorded back in 1972. Ian Gillan, the vocalist, can be overheard asking the sound engineer: “Yeah everything up here please. A bit more monitor if you’ve got it.” To which Ritchie Blackmore, the guitarist, adds: “Can I have everything louder than everything else?”

been ‘anti-collaboration’. This has, ironically, made the situation worse by encouraging users to find ‘work-arounds’, resulting in, for example, the proliferation of spreadsheets. As Boris Evelson of Forrester Research recently commented to me in an email on this topic, “We increasingly hear from our clients that BI silos are now proliferating. Basically these platforms are now becoming the new spreadsheets.”


To a greater or lesser extent, everyone collaborates. I watch my two-year-old twins collaborate daily. I listen to the “collaborative creativity” within a jazz ensemble. Yet so often in a work context we operate in silos.


So what is Collaborative Business Intelligence? According to Howard Dresner, it is “a process where two or more people or organizations work together to develop a common understanding, which is shared and used to build consensus in support of organizational decision making.” Collaborative capabilities include sharing, annotating and co-authoring of business content.

yield big improvements in unexpected areas. Be open-minded and willing to challenge perceptions. As Nolan Bushnell advises in his book “Finding the Next Steve Jobs”: neutralize the naysayers (“any idiot can say no”).

